C# is still a popular backend programming language, and you might find yourself in need of it for scraping a web page (or multiple pages). In this article, we will cover scraping with C# using an HTTP request, parsing the results, and then extracting the information that you want to save. This method is common with basic scraping, but you will sometimes come across single-page web applications built in JavaScript such as Node.js, which require a different approach. We’ll also cover scraping these pages using PuppeteerSharp, Selenium WebDriver, and Headless Chrome.

Note: This article assumes that the reader is familiar with C# syntax and HTTP request libraries. The PuppeteerSharp and Selenium WebDriver .NET libraries are available to make integration of Headless Chrome easier for developers. Also, this project is using .NET Core 3.1 framework and the HTML Agility Pack for parsing raw HTML.

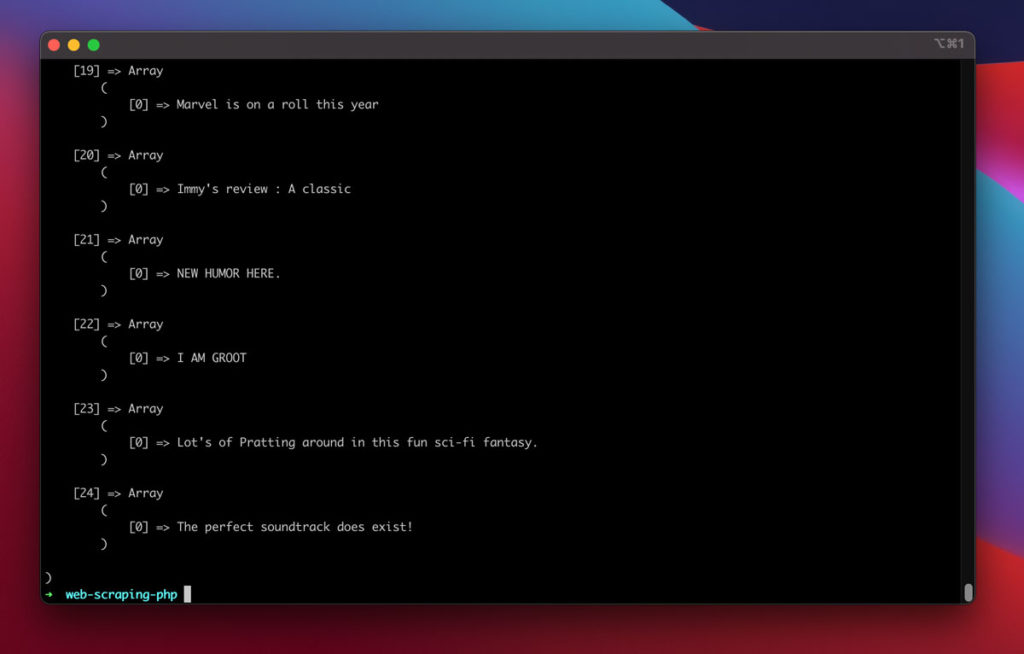

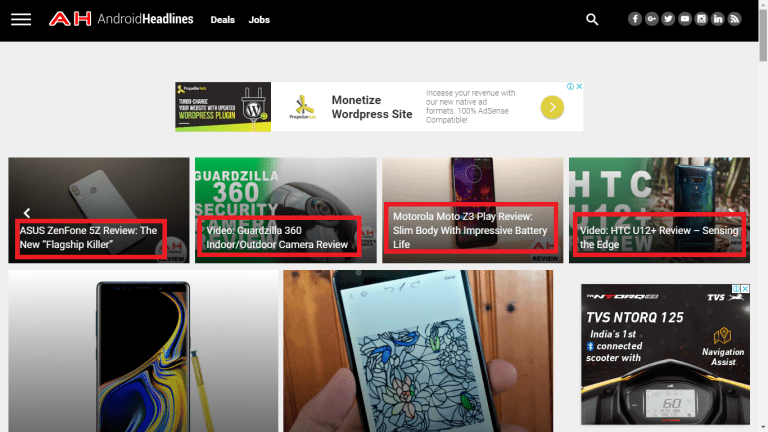

This video covers pulling HTML elements from the DOM programmatically using PHP.If you want to do one of the following actions:- Receive 1 on 1 mentoring fro. Learn web scraping with C# with this step-by-step tutorial covering the must-known C# web-scraping tools and libraries. Jennifer Marsh 05 October, 2020 12 min read Jennifer Marsh is a software developer and technology writer for a number of publications across several industries including cybersecurity, programming, DevOps, and IT operations. Web Scraping Using PHP We will explore some PHP libraries which can be used to understand how to use the HTTP protocol as far as our PHP code is concerned, how we can steer clear of the built-in API wrappers and in its place, think of using something that is way more simple and easy to manage for web scraping.

Part I: Static Pages

Setup

However, I learned about some new tools in the last two sections, and web scraping is a significant part of my day job's business (though I don't work on that part of it). We mostly use Scrapy (python) but have a sizable PHP stack as well. PHP & Web Scraping Projects for $30 - $250. Php web crolling, scrapping I want to get the most recent value (red box) of the site in the attachment every 5 minutes 1,081,269 2021-04.

If you’re using C# as a language, you probably already use Visual Studio. This article uses a simple .NET Core Web Application project using MVC (Model View Controller). After you create a new project, go to the NuGet Package Manager where you can add the necessary libraries used throughout this tutorial.

In NuGet, click the “Browse” tab and then type “HTML Agility Pack” to find the dependency.

Install the package, and then you’re ready to go. This package makes it easy to parse the downloaded HTML and find tags and information that you want to save.

Finally, before you get started with coding the scraper, you need the following libraries added to the codebase:

Making an HTTP Request to a Web Page in C#

Imagine that you have a scraping project where you need to scrape Wikipedia for information on famous programmers. Wikipedia has a page with a list of famous programmers with links to each profile page. You can scrape this list and add it to a CSV file (or Excel spreadsheet) to save for future review and use. This is just one simple example of what you can do with web scraping, but the general concept is to find a site that has the information you need, use C# to scrape the content, and store it for later use. In more complex projects, you can crawl pages using the links found on a top category page.

Using .NET HTTP Libraries to Retrieve HTML

.NET Core introduced asynchronous HTTP request libraries to the framework. These libraries are native to .NET, so no additional libraries are needed for basic requests. Before you make the request, you need to build the URL and store it in a variable. Because we already know the page that we want to scrape, a simple URL variable can be added to the HomeController’s Index() method. The HomeController Index() method is the default call when you first open an MVC web application.

Add the following code to the Index() method in the HomeController file:

Using .NET HTTP libraries, a static asynchronous task is returned from the request, so it’s easier to put the request functionality in its own static method. Add the following method to the HomeController file:

Let’s break down each line of code in the above CallUrl() method.

This statement creates an HttpClient variable, which is an object from the native .NET framework.

If you get HTTPS handshake errors, it’s likely because you are not using the right cryptographic library. The above statement forces the connection to use the TLS 1.3 library so that an HTTPS handshake can be established. Note that TLS 1.3 is deprecated but some web servers do not have the latest 2.0+ libraries installed. For this basic task, cryptographic strength is not important but it could be for some other scraping requests involving sensitive data.

This statement clears headers should you decide to add your own. For instance, you might scrape content using an API request that requires a Bearer authorization token. In such a scenario, you would then add a header to the request. For example:

The above would pass the authorization token to the web application server to verify that you have access to the data. Next, we have the last two lines:

These two statements retrieve the HTML content, await the response (remember this is asynchronous) and return it to the HomeController’s Index() method where it was called. The following code is what your Index() method should contain (for now):

The code to make the HTTP request is done. We still haven’t parsed it yet, but now is a good time to run the code to ensure that the Wikipedia HTML is returned instead of any errors. Make sure you set a breakpoint in the Index() method at the following line:

This will ensure that you can use the Visual Studio debugger UI to view the results.

You can test the above code by clicking the “Run” button in the Visual Studio menu:

Visual Studio will stop at the breakpoint, and now you can view the results.

If you click “HTML Visualizer” from the context menu, you can see a raw HTML view of the results, but you can see a quick preview by just hovering your mouse over the variable. You can see that HTML was returned, which means that an error did not occur.

Parsing the HTML

With the HTML retrieved, it’s time to parse it. HTML Agility Pack is a common tool, but you may have your own preference. Even LINQ can be used to query HTML, but for this example and for ease of use, the Agility Pack is preferred and what we will use.

Before you parse the HTML, you need to know a little bit about the structure of the page so that you know what to use as markers for your parsing to extract only what you want and not every link on the page. You can get this information using the Chrome Inspect function. In this example, the page has a table of contents links at the top that we don’t want to include in our list. You can also take note that every link is contained within an <li> element.

From the above inspection, we know that we want the content within the “li” element but not the ones with the tocsection class attribute. With the Agility Pack, we can eliminate them from the list.

We will parse the document in its own method in the HomeController, so create a new method named ParseHtml() and add the following code to it:

In the above code, a generic list of strings (the links) is created from the parsed HTML with a list of links to famous programmers on the selected Wikipedia page. We use LINQ to eliminate the table of content links, so now we just have the HTML content with links to programmer profiles on Wikipedia. We use .NET’s native functionality in the foreach loop to parse the first anchor tag that contains the link to the programmer profile. Because Wikipedia uses relative links in the href attribute, we manually create the absolute URL to add convenience when a reader goes into the list to click each link.

Exporting Scraped Data to a File

The code above opens the Wikipedia page and parses the HTML. We now have a generic list of links from the page. Now, we need to export the links to a CSV file. We’ll make another method named WriteToCsv() to write data from the generic list to a file. The following code is the full method that writes the extracted links to a file named “links.csv” and stores it on the local disk.

The above code is all it takes to write data to a file on local storage using native .NET framework libraries.

The full HomeController code for this scraping section is below.

Part II: Scraping Dynamic JavaScript Pages

In the previous section, data was easily available to our scraper because the HTML was constructed and returned to the scraper the same way a browser would receive data. Newer JavaScript technologies such as Vue.js render pages using dynamic JavaScript code. When a page uses this type of technology, a basic HTTP request won’t return HTML to parse. Instead, you need to parse data from the JavaScript rendered in the browser.

Dynamic JavaScript isn’t the only issue. Some sites detect if JavaScript is enabled or evaluate the UserAgent value sent by the browser. The UserAgent header is a value that tells the web server the type of browser being used to access pages (e.g. Chrome, FireFox, etc). If you use web scraper code, no UserAgent is sent and many web servers will return different content based on UserAgent values. Some web servers will use JavaScript to detect when a request is not from a human user.

You can overcome this issue using libraries that leverage Headless Chrome to render the page and then parse the results. We’re introducing two libraries freely available from NuGet that can be used in conjunction with Headless Chrome to parse results. PuppeteerSharp is the first solution we use that makes asynchronous calls to a web page. The other solution is Selenium WebDriver, which is a common tool used in automated testing of web applications.

Using PuppeteerSharp with Headless Chrome

For this example, we will add the asynchronous code directly into the HomeController’s Index() method. This requires a small change to the default Index() method shown in the code below.

In addition to the Index() method changes, you must also add the library reference to the top of your HomeController code. Before you can use Puppeteer, you first must install the library from NuGet and then add the following line in your using statements:

Now, it’s time to add your HTTP request and parsing code. In this example, we’ll extract all URLs (the <a> tag) from the page. Add the following code to the HomeController to pull the page source in Headless Chrome, making it available for us to extract links (note the change in the Index() method, which replaces the same method in the previous section example):

Similar to the previous example, the links found on the page were extracted and stored in a generic list named programmerLinks. Notice that the path to chrome.exe is added to the options variable. If you don’t specify the executable path, Puppeteer will be unable to initialize Headless Chrome.

Using Selenium with Headless Chrome

If you don’t want to use Puppeteer, you can use Selenium WebDriver. Selenium is a common tool used in automation testing on web applications, because in addition to rendering dynamic JavaScript code, it can also be used to emulate human actions such as clicks on a link or button. To use this solution, you need to go to NuGet and install Selenium.WebDriver and (to use Headless Chrome) Selenium.WebDriver.ChromeDriver. Note: Selenium also has drivers for other popular browsers such as FireFox.

Add the following library to the using statements:

Now, you can add the code that will open a page and extract all links from the results. The following code demonstrates how to extract links and add them to a generic list.

Notice that the Selenium solution is not asynchronous, so if you have a large pool of links and actions to take on a page, it will freeze your program until the scraping completes. This is the main difference between the previous solution using Puppeteer and Selenium.

Conclusion

Web scraping is a powerful tool for developers who need to obtain large amounts of data from a web application. With pre-packaged dependencies, you can turn a difficult process into only a few lines of code.

One issue we didn’t cover is getting blocked either from remote rate limits or blocks put on bot detection. Your code would be considered a bot by some applications that want to limit the number of bots accessing data. Our web scraping API can overcome this limitation so that developers can focus on parsing HTML and obtaining data rather than determining remote blocks.

Web crawling is a powerful technique to collect data from the web by finding all the URLs for one or multiple domains. Python has several popular web crawling libraries and frameworks.

In this article, we will first introduce different crawling strategies and use cases. Then we will build a simple web crawler from scratch in Python using two libraries: requests and Beautiful Soup. Next, we will see why it’s better to use a web crawling framework like Scrapy. Finally, we will build an example crawler with Scrapy to collect film metadata from IMDb and see how Scrapy scales to websites with several million pages.

What is a web crawler?

Web crawling and web scraping are two different but related concepts. Web crawling is a component of web scraping, the crawler logic finds URLs to be processed by the scraper code.

A web crawler starts with a list of URLs to visit, called the seed. For each URL, the crawler finds links in the HTML, filters those links based on some criteria and adds the new links to a queue. All the HTML or some specific information is extracted to be processed by a different pipeline.

Web crawling strategies

In practice, web crawlers only visit a subset of pages depending on the crawler budget, which can be a maximum number of pages per domain, depth or execution time.

Most popular websites provide a robots.txt file to indicate which areas of the website are disallowed to crawl by each user agent. The opposite of the robots file is the sitemap.xml file, that lists the pages that can be crawled.

Popular web crawler use cases include:

- Search engines (Googlebot, Bingbot, Yandex Bot…) collect all the HTML for a significant part of the Web. This data is indexed to make it searchable.

- SEO analytics tools on top of collecting the HTML also collect metadata like the response time, response status to detect broken pages and the links between different domains to collect backlinks.

- Price monitoring tools crawl e-commerce websites to find product pages and extract metadata, notably the price. Product pages are then periodically revisited.

- Common Crawl maintains an open repository of web crawl data. For example, the archive from October 2020 contains 2.71 billion web pages.

Next, we will compare three different strategies for building a web crawler in Python. First, using only standard libraries, then third party libraries for making HTTP requests and parsing HTML and finally, a web crawling framework.

Building a simple web crawler in Python from scratch

To build a simple web crawler in Python we need at least one library to download the HTML from a URL and an HTML parsing library to extract links. Python provides standard libraries urllib for making HTTP requests and html.parser for parsing HTML. An example Python crawler built only with standard libraries can be found on Github.

The standard Python libraries for requests and HTML parsing are not very developer-friendly. Other popular libraries like requests, branded as HTTP for humans, and Beautiful Soup provide a better developer experience. You can install the two libraries locally.

A basic crawler can be built following the previous architecture diagram.

The code above defines a Crawler class with helper methods to download_url using the requests library, get_linked_urls using the Beautiful Soup library and add_url_to_visit to filter URLs. The URLs to visit and the visited URLs are stored in two separate lists. You can run the crawler on your terminal.

The crawler logs one line for each visited URL.

The code is very simple but there are many performance and usability issues to solve before successfully crawling a complete website.

- The crawler is slow and supports no parallelism. As can be seen from the timestamps, it takes about one second to crawl each URL. Each time the crawler makes a request it waits for the request to be resolved and no work is done in between.

- The download URL logic has no retry mechanism, the URL queue is not a real queue and not very efficient with a high number of URLs.

- The link extraction logic doesn’t support standardizing URLs by removing URL query string parameters, doesn’t handle URLs starting with #, doesn’t support filtering URLs by domain or filtering out requests to static files.

- The crawler doesn’t identify itself and ignores the robots.txt file.

Next, we will see how Scrapy provides all these functionalities and makes it easy to extend for your custom crawls.

Web crawling with Scrapy

Scrapy is the most popular web scraping and crawling Python framework with 40k stars on Github. One of the advantages of Scrapy is that requests are scheduled and handled asynchronously. This means that Scrapy can send another request before the previous one is completed or do some other work in between. Scrapy can handle many concurrent requests but can also be configured to respect the websites with custom settings, as we’ll see later.

Scrapy has a multi-component architecture. Normally, you will implement at least two different classes: Spider and Pipeline. Web scraping can be thought of as an ETL where you extract data from the web and load it to your own storage. Spiders extract the data and pipelines load it into the storage. Transformation can happen both in spiders and pipelines, but I recommend that you set a custom Scrapy pipeline to transform each item independently of each other. This way, failing to process an item has no effect on other items.

On top of all that, you can add spider and downloader middlewares in between components as it can be seen in the diagram below.

Scrapy Architecture Overview [source]

If you have used Scrapy before, you know that a web scraper is defined as a class that inherits from the base Spider class and implements a parse method to handle each response. If you are new to Scrapy, you can read this article for easy scraping with Scrapy.

Scrapy also provides several generic spider classes: CrawlSpider, XMLFeedSpider, CSVFeedSpider and SitemapSpider. The CrawlSpider class inherits from the base Spider class and provides an extra rules attribute to define how to crawl a website. Each rule uses a LinkExtractor to specify which links are extracted from each page. Next, we will see how to use each one of them by building a crawler for IMDb, the Internet Movie Database.

Building an example Scrapy crawler for IMDb

Before trying to crawl IMDb, I checked IMDb robots.txt file to see which URL paths are allowed. The robots file only disallows 26 paths for all user-agents. Scrapy reads the robots.txt file beforehand and respects it when the ROBOTSTXT_OBEY setting is set to true. This is the case for all projects generated with the Scrapy command startproject.

This command creates a new project with the default Scrapy project folder structure.

Then you can create a spider in scrapy_crawler/spiders/imdb.py with a rule to extract all links.

You can launch the crawler in the terminal.

You will get lots of logs, including one log for each request. Exploring the logs I noticed that even if we set allowed_domains to only crawl web pages under https://www.imdb.com, there were requests to external domains, such as amazon.com.

IMDb redirects from URLs paths under whitelist-offsite and whitelist to external domains. There is an open Scrapy Github issue that shows that external URLs don’t get filtered out when the OffsiteMiddleware is applied before the RedirectMiddleware. To fix this issue, we can configure the link extractor to deny URLs starting with two regular expressions.

Rule and LinkExtractor classes support several arguments to filter out URLs. For example, you can ignore specific URL extensions and reduce the number of duplicate URLs by sorting query strings. If you don’t find a specific argument for your use case you can pass a custom function to process_links in LinkExtractor or process_values in Rule.

For example, IMDb has two different URLs with the same content.

To limit the number of crawled URLs, we can remove all query strings from URLs with the url_query_cleaner function from the w3lib library and use it in process_links.

Now that we have limited the number of requests to process, we can add a parse_item method to extract data from each page and pass it to a pipeline to store it. For example, we can either extract the whole response.text to process it in a different pipeline or select the HTML metadata. To select the HTML metadata in the header tag we can code our own XPATHs but I find it better to use a library, extruct, that extracts all metadata from an HTML page. You can install it with pip install extract.

I set the follow attribute to True so that Scrapy still follows all links from each response even if we provided a custom parse method. I also configured extruct to extract only Open Graph metadata and JSON-LD, a popular method for encoding linked data using JSON in the Web, used by IMDb. You can run the crawler and store items in JSON lines format to a file.

Php Web Scraping Javascript

The output file imdb.jl contains one line for each crawled item. For example, the extracted Open Graph metadata for a movie taken from the <meta> tags in the HTML looks like this.

The JSON-LD for a single item is too long to be included in the article, here is a sample of what Scrapy extracts from the <script type='application/ld+json'> tag.

Exploring the logs, I noticed another common issue with crawlers. By sequentially clicking on filters, the crawler generates URLs with the same content, only that the filters were applied in a different order.

Long filter and search URLs is a difficult problem that can be partially solved by limiting the length of URLs with a Scrapy setting, URLLENGTH_LIMIT.

I used IMDb as an example to show the basics of building a web crawler in Python. I didn’t let the crawler run for long as I didn’t have a specific use case for the data. In case you need specific data from IMDb, you can check the IMDb Datasets project that provides a daily export of IMDb data and IMDbPY, a Python package for retrieving and managing the data.

Php Web Scraping Table

Web crawling at scale

If you attempt to crawl a big website like IMDb, with over 45M pages based on Google, it’s important to crawl responsibly by configuring the following settings. You can identify your crawler and provide contact details in the BOT_NAME setting. To limit the pressure you put on the website servers you can increase the DOWNLOAD_DELAY, limit the CONCURRENT_REQUESTS_PER_DOMAIN or set AUTOTHROTTLE_ENABLED that will adapt those settings dynamically based on the response times from the server.

Notice that Scrapy crawls are optimized for a single domain by default. If you are crawling multiple domains check these settings to optimize for broad crawls, including changing the default crawl order from depth-first to breath-first. To limit your crawl budget, you can limit the number of requests with the CLOSESPIDER_PAGECOUNT setting of the close spider extension.

With the default settings, Scrapy crawls about 600 pages per minute for a website like IMDb. To crawl 45M pages it will take more than 50 days for a single robot. If you need to crawl multiple websites it can be better to launch separate crawlers for each big website or group of websites. If you are interested in distributed web crawls, you can read how a developer crawled 250M pages with Python in 40 hours using 20 Amazon EC2 machine instances.

In some cases, you may run into websites that require you to execute JavaScript code to render all the HTML. Fail to do so, and you may not collect all links on the website. Because nowadays it’s very common for websites to render content dynamically in the browser I wrote a Scrapy middleware for rendering JavaScript pages using ScrapingBee’s API.

Conclusion

We compared the code of a Python crawler using third-party libraries for downloading URLs and parsing HTML with a crawler built using a popular web crawling framework. Scrapy is a very performant web crawling framework and it’s easy to extend with your custom code. But you need to know all the places where you can hook your own code and the settings for each component.

Configuring Scrapy properly becomes even more important when crawling websites with millions of pages. If you want to learn more about web crawling I suggest that you pick a popular website and try to crawl it. You will definitely run into new issues, which makes the topic fascinating!

Sources